Data can be powerful.

But does your data pose a risk to analysing risk?

To make smart risk decisions at pace, you need data you can trust - data that shows where things are, precisely. Three things stop you from being competitive. All three challenges can be overcome with simple solutions...

Risk manager, data manager, underwriter or procurement professional – if you're calculating risk for insurance, then it's essential to have location data you can trust. Being able to identify addresses accurately, with ease, helps you to be more effective and efficient, too. And the right location data can also help you discover unexpectedly profitable insights.

However, three challenges stop most insurers either from using their data to best effect or from achieving a competitive advantage, because their own data has become a risk.

Excessive expense

Everyone has a budget, but data procurement costs can spiral when a team doesn't stay on top of its data spend and understand what it's buying, and why. It’s easily done.

Often, risk teams fall into the trap of licensing more data than they need. Traditionally, for example, a team will license a full set of attributes, or data for a very large area. For insurers calculating granular risk across multiple datasets, access to myriad factors is certainly essential - but data curation has moved with the times, and it is now possible to license subsets of data, with far more flexibility over time and target areas built into the contract.

Another area where overspend can happen is the licensing itself. When multiple teams work on different aspects of the same risk, or many teams in a business use siloed data (for product security, for example), overlaps in licensing can occur, so the same data may be licensed more than once.
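As a rough illustration of how such overlaps might be spotted, the sketch below groups a licence register by dataset and area and flags any combination held by more than one team. The register, its field names and the team names are all hypothetical; a real register would likely also need to account for licence terms and dates.

```python
from collections import defaultdict

# Hypothetical licence register: teams, datasets and areas are illustrative only.
licences = [
    {"team": "underwriting", "dataset": "flood_risk", "area": "Greater London"},
    {"team": "pricing", "dataset": "flood_risk", "area": "Greater London"},
    {"team": "claims", "dataset": "subsidence", "area": "Kent"},
]


def find_overlaps(records):
    """Return {(dataset, area): [teams]} for combinations licensed by 2+ teams."""
    teams_by_key = defaultdict(set)
    for record in records:
        teams_by_key[(record["dataset"], record["area"])].add(record["team"])
    # Keep only the dataset/area pairs that more than one team is paying for.
    return {
        key: sorted(teams)
        for key, teams in teams_by_key.items()
        if len(teams) > 1
    }


overlaps = find_overlaps(licences)
for (dataset, area), teams in overlaps.items():
    print(f"{dataset} / {area} is licensed by: {', '.join(teams)}")
```

Exact matching on dataset and area is a simplification; partially overlapping areas would need a spatial comparison rather than a string match.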

Sub-standard quality

To gain a competitive advantage, risk calculations must be as accurate as possible. Nothing less than precisely geo-referenced data will do - but not all location data is of the same quality.

A case in point: many address datasets use the Postcode Address File (PAF), the database of all known 'delivery points' in the United Kingdom. The PAF is a collection of over 29 million Royal Mail postal addresses and 1.8 million additional postcodes. Each of those postcodes is tied to a location within an irregular polygon, so the PAF doesn't actually specify a precise location for a property.

That makes a huge difference when you're calculating risk at property level. There is a world of difference between "right next to the river and liable to flood" and "right next to the river, but a Victorian terrace situated 35m above the highest recorded flood level."

Pinpoint property location is the key to most risk assessment for individual properties. The numbers of bathrooms, bedrooms, basements and sub-basements; the extent of boundaries, proximity to trees, and the extent of tree canopies; flood types, subsidence risk related to current and previous year weather conditions… the list goes on. But granular information is only one part of the story.

As more insurers discover the value in granular location data, the true gains are being made in combinations of detailed datasets. The finer points of a risk assessment may only reveal themselves through the comparison of two or more datasets - and that leads to the third challenge.

Joining the dots

There's no better way to describe it. Making strong links - joining the dots - between entries in vast property, location, spatial and risk datasets is a costly and time-consuming task for any business. There's also a greater need than ever to bring environmental and geological considerations into the mix: subsidence and geoclimatic data, commercial ownership data, title and tenure data, flood risk mapping, and UK crime modelling can all tip the balance in a risk analysis. It's essential to join the dots accurately - and to keep the costs down at the same time.

At this point, some insurers struggle with the sheer magnitude of data in play and the number of teams needing efficient access to it. True, a strong business model will factor those costs in, but the market is tight. Competitive costing is also key, which raises even more questions – where have the datasets come from? Are they all sourced centrally? How are they being compared, or linked? And if there's more than one supplier, what are the guarantees around data quality and consistency?

Anyone who handles data for risk management knows that currency - how up to date the data is - may be the biggest challenge in linking datasets with confidence.

The answer to all three challenges

Expense, quality, and joining the dots. Three challenges, all rolled into one. However, there is an effective way of overcoming them. It involves choosing licensing that works the way you do; procuring data that's curated for accuracy and currency and, more importantly, paying only for access to the property attributes that are relevant; and making sure the Unique Property Reference Number (UPRN) is integrated at source, as the key to linking datasets with confidence.
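To show what using the UPRN as a linking key can look like in practice, here is a minimal sketch that joins an address list to a flood-risk list on a shared UPRN field and reports any addresses left without a match. The records, field names and UPRN values are invented for illustration, and the function is an assumption about one simple way to do this, not a description of any particular product.

```python
# Hypothetical records: UPRNs, addresses and flood bands are made up.
# UPRNs are kept as strings here so the key compares exactly across datasets.
addresses = [
    {"uprn": "100000000001", "address": "1 River Walk"},
    {"uprn": "100000000002", "address": "2 River Walk"},
    {"uprn": "100000000003", "address": "3 River Walk"},
]
flood_risk = [
    {"uprn": "100000000001", "flood_band": "high"},
    {"uprn": "100000000003", "flood_band": "low"},
]


def link_by_uprn(left, right):
    """Join two record lists on 'uprn'.

    Returns (linked, unmatched), where linked holds the merged records and
    unmatched holds the UPRNs from `left` that have no entry in `right`.
    """
    right_index = {row["uprn"]: row for row in right}
    linked, unmatched = [], []
    for row in left:
        match = right_index.get(row["uprn"])
        if match is None:
            unmatched.append(row["uprn"])
        else:
            linked.append({**row, **match})
    return linked, unmatched


linked, unmatched = link_by_uprn(addresses, flood_risk)
print(f"{len(linked)} linked, {len(unmatched)} without a flood record")
```

Reporting the unmatched UPRNs, rather than silently dropping them, gives a quick view of gaps between the datasets being joined.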

In fact, done properly, overcoming the challenges that are holding back the quality of your risk analysis can also pinpoint hidden risks, surface value, and cut costs at the same time.

Latest News

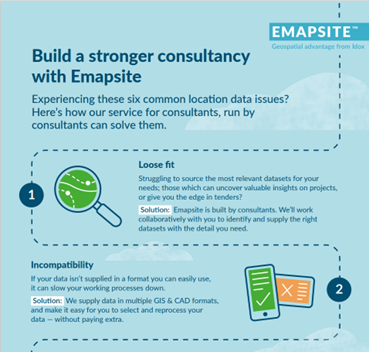

Six Location Data Challenges for Consultancies | Emapsite

Location data is critical to consultancy work, yet common issues like outdated datasets, licensing uncertainty, and incompatible formats can slow projects down and increase risk. This infographic explores six of the most frequent location data challenges faced by consultancies today — and how teams are overcoming them with reliable, compliant, and easy-to-use spatial data.

Read Full Article-

Future-proofing Assets Against Flooding in Britain

Read Full Article -

Why address data is the unsung hero in the UK’s new-build recovery

Read Full Article