Enterprise brain damage and the data wonks

Boom – and there it was! Let me tell you a story: a FTSE company was concerned it wasn’t curating internal data as well as it might. To date, three layers of social data and some real-time analysis had been used as an overlay on a map, providing a simple dashboard that a management team could use to visualise customer vulnerability.

A relatively easy task you might think, but the data team wasn’t producing the expected results. Emapsite was asked to line it all up and make it work, which we did with ease … but if it was that easy to fix the problem, why wasn’t it possible for the internal team to achieve the same results?

Results depend on data AND skills

Well, there’s a common friction point for many enterprise-sized businesses. It’s hard to manipulate and present business intelligence well, because the people wrangling that data aren’t working as closely with each other as they could be. The frustration is felt most between Proposition and Customer Facing teams, who both want innovation and optimum results from internal data. Unfortunately, the results they do get are often kludgy, fudgy and late.

In fact, I’ve been rereading Richard D’Aveni’s Hypercompetition, and it reminds me of a trend we’re seeing in three unrelated industries in our customer base. It’s something that’s causing significant change and, as one CTO put it, “a level of enterprise brain damage” that she’d never seen before.

This enterprise brain damage – the deadening of a business’s top-down capabilities – arises from the widening gap between the company’s technical data-wrangling capabilities and its ability to deploy that skill effectively as a fast track to better risk management, better customer analysis, and more efficient asset management. Companies want a competitive advantage [or relief from regulatory pressure]; they’re looking to their data for intelligent answers that will make sense right across a portfolio … and that data, for all kinds of reasons, is hard to wrangle and work with effectively because, in the first place, it’s not fit for purpose.

So, the natural flow of events is this: a first wave of competition causes price cuts. A second wave maintains margins by cost cutting. The damage that’s being done internally – due to that disconnect between data and skillsets – means that responses to these commercial challenges are held back by a lack of innovation capability. In turn, this causes an ever-widening gap between the CEO’s appetite for information-driven progress and the poor old data team, who take the blame for failing to pivot under this new and frightening spotlight. However, there are many ways to overcome this problem.

Marginal gains, maximum impact

In the insurance sector, for example, we’ve helped many exemplar customers to get their internal data ready for use with external analysis to help write better policies. When the data is up to scratch, machine learning can then filter out customer responses that can’t be true, such as, “I park my car on the drive” [our data work will enable the business to determine whether or not the policyholder has a drive]. Niche answers like this deliver the marginal improvements in pricing – and the decreases in exposure for a book – that are needed to deliver significant competitive gains.

For utility firms and in the water sector, we are seeing a pre- and post-COVID-19 effect. There’s not as much appetite for onsite surveyed solutions; there’s a greater propensity to ‘let the computers do the heavy lifting’. The utility firms want to lose less water or dig up fewer cables each year, using a blend of internal asset information and external analysis to reduce their risks and costs. They know that spatial analysis can help them, but here’s the problem: the part of the business that needs to provide the essential internal data cannot do that – usually due to lack of care or skills, or due to previous changes in company structure … none of the accumulated data is interoperable.

In real estate, the agent’s personal insights are slowly being replaced as the corporate approach centralises market intelligence and uses it to build better collective wisdom. This means that the faster, better-equipped corporate firms can draw ahead of smaller companies, which may hold a treasure trove of local data that is locked away in silos.

By way of an example: we also have an international firm that’s asking us to add value by mapping their internal data assets to third-party and open data … enabling them to spot millions in additional advisory and transactional fees that wouldn’t be visible to a tribe of solo dealers. It’s all about increasing your computing capacity, by which I mean having the experience and ability to visualise and harness the value that’s hidden within your internal data.

Closing the gap

In this race for competitive advantage, then, the ‘enterprise brain damage’ stems from Business Unit Heads who can’t understand why their ‘simple’ request for data-driven business intelligence is so hard to deliver. Hence the chasm that opens up between the data teams and the many stakeholders whose response to competitive pressure is, or would like to be, data-driven.

“Oh, and can you make a predictive model for me?” – BANG! – there goes the CTO’s smartwatch suggesting a lie down to assuage the blood pressure spike.

What’s needed is a tried-and-tested way to close the gap. Spatialisation – using location data to derive insights – and good, high-quality visualisations are multi-dimensional ways to achieve this. There’s a lot more to spatialising data effectively than connecting territories to sales stats. Done properly, it takes lots of data and basic computing power. That limitation was once the problem but, with cloud computing resources now easier to access, it’s no longer the defining factor. The thing that’s actually holding businesses back – causing the enterprise brain damage – is the desire to deliver in-house solutions from an underfunded, inadequate talent pool that can’t respond fast enough or well enough to the problem in hand. And, as it’s second nature for most businesses to pivot or flex in a fast-paced competitive market, that gap is in danger of growing exponentially unless agility, interoperability and a nexus for innovation are introduced in a robust, planned way.

So, how do we do it? How do we introduce data-wrangling capability effectively into an enterprise business, particularly one that’s using data from many different departments?

Call me. I’ll explain.

Rich Pawlyn, Managing Director at emapsite, with over two decades' expertise in solving, scaling and building geo-data intelligence solutions. https://www.linkedin.com/in/richpawlyn/

Latest News

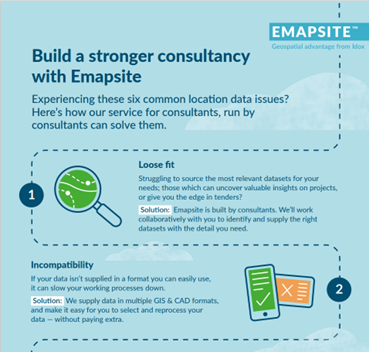

Six Location Data Challenges for Consultancies | Emapsite

Location data is critical to consultancy work, yet common issues like outdated datasets, licensing uncertainty, and incompatible formats can slow projects down and increase risk. This infographic explores six of the most frequent location data challenges faced by consultancies today — and how teams are overcoming them with reliable, compliant, and easy-to-use spatial data.

Read Full Article-

Future-proofing Assets Against Flooding in Britain

Read Full Article -

Why address data is the unsung hero in the UK’s new-build recovery

Read Full Article